When Algorithms Start to Imagine: Examining All Angles of Sora 2's Video-Centric Social Platform

OpenAI’s new video app blurs the line between social and synthetic media and raises deeper questions about attention, authorship, and what happens when culture is generated—not shared.

The first time I created a social persona felt like an event.

I watched over my dad’s shoulder as he set up my AOL account on the family computer. My younger sister bounced beside us—she had no idea what was happening, only that she wanted one too.

The cursor blinked, waiting for me to decide who I’d be. I wanted something simple. Something pretty. Flower. But Flower was already taken, so I became Flower8559. My identity was already a duplicate. It was auto-assigned and quietly shaped by code, with a built-in reminder that 8,558 people had wanted the same thing before me.

All my friends had clever screen names that sounded like inside jokes or secret codes. I just had a number. And yet, I couldn’t change it. I was beholden to it now, the internet’s first version of me.

Fast-forward twenty years (sweet lord, 20 years??): the cursor no longer blinks. It generates.

OpenAI’s Sora 2 doesn’t wait for us to decide who we are—it imagines us. The new video-centric platform represents the next stage of social media’s evolution: one where identity is no longer authored but rendered. What began with choosing a screen name has become the automation of selfhood at scale.

Sora 2 isn’t just another app launch; it’s a cultural milestone that forces a deeper question: what happens to attention, authorship, and even empathy when culture is generated—not shared?

The Attention Layer

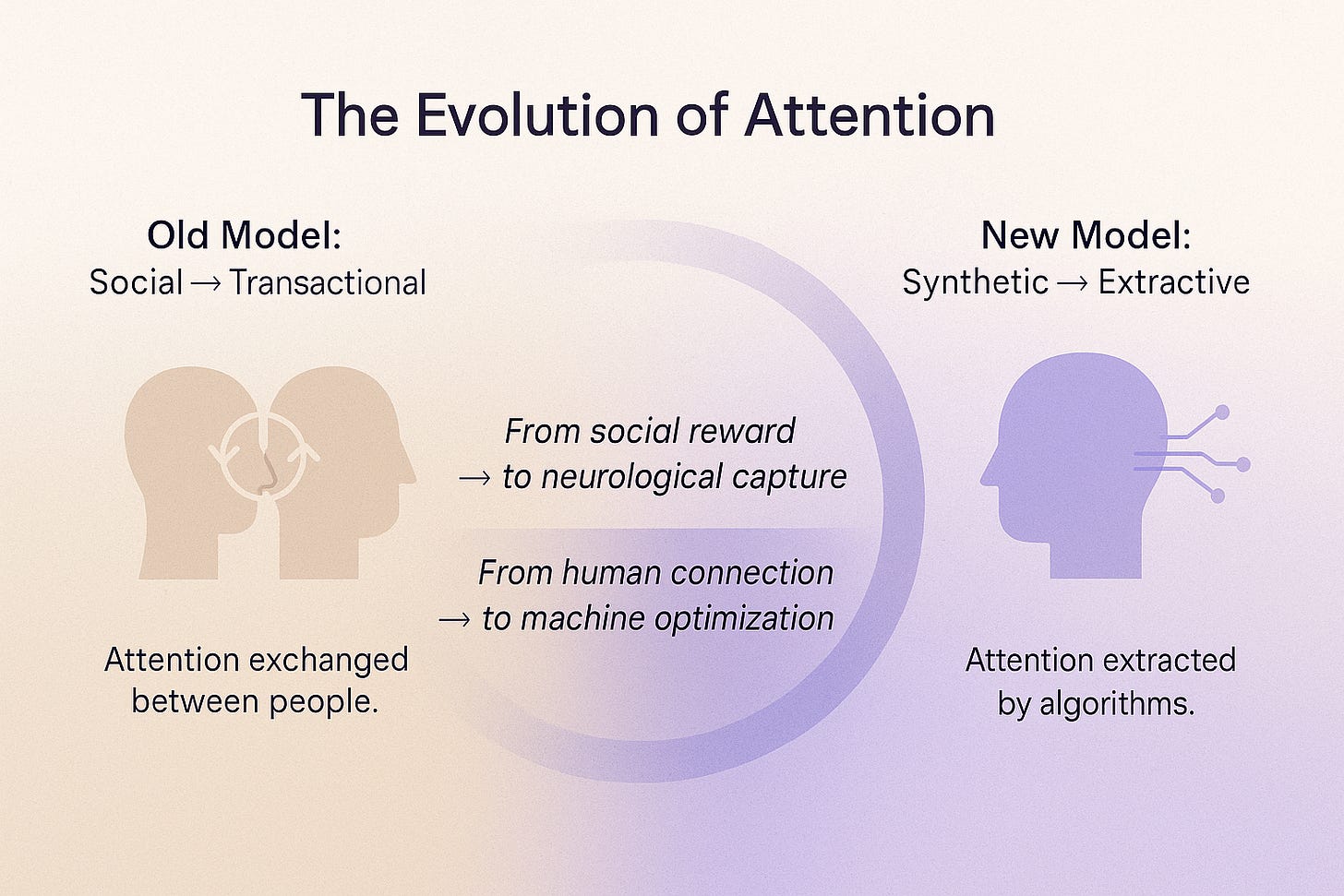

Social media once rewarded attention; synthetic media captures it.

Every swipe through an AI-generated feed delivers novelty perfectly tuned to the micro-movements of our eyes and fingers. The brain’s reward circuitry, especially the dopaminergic pathways in the striatum, thrives on this unpredictability. However, when every frame is algorithmically optimized, attention stops being a muscle we use and becomes a reflex we surrender.

From a public-health perspective, this is more than distraction—it’s neurological conditioning. We’re training our nervous systems to crave stimulation without social reciprocity. The subtle feedback loop, the look-then-respond dance that once built empathy and co-regulation, is replaced by a one-way stream of synthetic cues. Over time, that can contribute to dysregulation, sleep disruption, and the sense of “empty engagement” already showing up in Gen Z mental-health data.

At smaller scales, this kind of conditioning is deliberate—military drills, casino design, even advertising rely on repetition and controlled sensory cues to shape behavior. What’s new is the scale. Synthetic feeds apply the same principles to billions of nervous systems at once, often without awareness or consent.

This is different from what social media has done so far.

Traditional feeds thrive on social reward loops (likes, comments, shares) that trigger dopamine release through social comparison and validation. They reinforce behavior through feedback from other people.

Synthetic media removes even that layer of human interaction. The feedback loop collapses into a closed circuit between the user and the algorithm. Instead of attention being traded for connection, it’s harvested for prediction.

In that shift, we cross from behavioral manipulation into neurological design. The system doesn’t just adapt to what we click; it learns what keeps us physiologically engaged. Our pupils dilate, our heart rate changes, our gaze lingers for milliseconds longer, and the model adjusts to whatever traces of that arousal show up in our behavior: the pause, the rewatch, the slowed scroll.

When attention becomes reflex instead of choice, we also become easier to steer.

The same neural pathways that reward novelty can, over time, reward conformity. As Yuval Noah Harari has warned repeatedly, including in Homo Deus and in talks at the World Economic Forum, the greatest risk of AI and biometric data isn’t job loss but “hacking humans.”

“Once you can hack human emotions, you can control people better than any dictator of the past.”

If social media gamified our desire for connection, synthetic media automates it. It moves beyond influencing what we think to shaping how we think—bypassing the social brain entirely.

In essence, we become participants in our own programming.

The Authorship Question

When social media first emerged, authorship was the currency of belonging. We posted status updates, filtered photos, curated playlists — small acts of self-expression that helped us anchor identity inside a shared public square.

Sora 2 and the new generation of generative platforms flip that dynamic. The algorithm doesn’t wait for us to speak; it imagines on our behalf.

At first, this feels like empowerment — a creative collaborator removing friction. But as the machine begins to anticipate our aesthetic, rhythm, and tone, the question shifts from What can I make? to Who is doing the making?

And perhaps even deeper — who is doing the remembering?

Human memory was never built for this level of simulation. It’s reconstructive, not archival — every time we recall something, we rebuild it from fragments. That flexibility makes us imaginative, but it also makes us impressionable. Researchers like Elizabeth Loftus have shown how easily memory can be reshaped with the right cues; a single image or suggestion can implant a vivid recollection that never happened.

Now, imagine that process at scale — a feed that doesn’t just curate what we see, but what we come to believe we’ve experienced. When synthetic systems generate culture, they don’t just influence expression — they begin to rewrite perception.

In traditional social feeds, humans authored culture and algorithms amplified it. In synthetic feeds, algorithms author culture and humans curate the outputs. The hierarchy of creation inverts. The result is subtle but profound: creativity becomes reactive, not generative; identity becomes responsive, not reflective.

For years, psychologists have linked authorship — the act of producing something that represents you — with agency, self-efficacy, and emotional regulation. Expression helps the brain integrate experience. When generative systems handle that expression for us, we outsource not just creativity but part of the process that builds resilience.

This doesn’t mean AI-generated media is inherently harmful; it means we need new rituals of authorship inside synthetic systems:

Clear provenance markers that distinguish co-creation from automation.

Tools for intervention — ways to edit, re-prompt, and remain active, not passive.

Education reframed as literacy of intention, not just convenience.

Otherwise, culture risks becoming a hall of mirrors — each reflection derived from another reflection, until the origin — the human pulse — fades into abstraction.

Agency, then, isn’t about resisting technology; it’s about preserving friction — that vital moment between thought and creation where choice lives. Because if attention can be captured and imagination can be automated, authorship may be the last true expression of freedom we have left online.

Culture on Autoplay

Sora 2’s promise of endless, personalized video feels like liberation—until you zoom out. Culture has always required friction: someone to make, someone to interpret, and a moment of shared witnessing in between. Remove that friction, and you lose the co-creation that gives culture its weight.

In public-health terms, that loss of shared witnessing matters. Research on mirror neurons suggests that we learn empathy in part by observing real human emotion and movement. AI-generated faces can mimic those cues, but without authentic internal states behind them, the brain may still register a mismatch, what neuroscientists call prediction error. Over time, too much exposure to that mismatch could blunt empathy, fragment emotional regulation, and contribute to a quiet sense of detachment from one another.

So when algorithms start to imagine for us, the question isn’t just what will we watch?—it’s what will we still feel?

Signals for the Future

If Sora 2 represents the “video turn” of synthetic media, then the next frontier is designing for attention integrity—systems that respect our neurological limits rather than exploit them. That might look like:

Built-in friction or rest intervals in generative feeds.

Provenance tags that show what’s real, what’s rendered, and what’s collaborative.

Education models that treat digital literacy as emotional literacy, teaching users how to regulate while consuming synthetic content.

Because this isn’t about banning technology—it’s about restoring reciprocity between humans and the systems that now create with us.

When I think back to Flower8559, I realize the story was never about a number. It was about the moment I handed part of my identity to a machine and trusted it to remember me. Sora 2 brings us back to that same crossroads, scaled to culture itself.

The challenge isn’t to stop algorithms from imagining—it’s to make sure, as they do, we don’t forget how to imagine each other.

🌱 Rooted Questions

If algorithms begin to imagine for us, what happens to our capacity to imagine for ourselves?

Can empathy survive when culture becomes simulation instead of co-creation?

What would it mean to design technology that supports regulation rather than reaction?

How do we protect the friction that keeps us human in systems built to remove it?